
Introduction

Subtitle Edit is a free, open-source subtitle editor for Windows (with Linux support) that has powerful audio-to-text capabilities built in (Subtitle Edit 4.0.11 / 4.0.12 Beta Download Free – VideoHelp). This means you can automatically transcribe video or audio into subtitles using AI – a game-changer for content creators and translators. Recent versions of Subtitle Edit support two major speech recognition engines: OpenAI Whisper and Vosk/Kaldi. Both allow offline, AI-driven subtitle generation, but they differ in accuracy, speed, and hardware requirements. In this guide, we’ll explore how to use both Whisper and Vosk in Subtitle Edit step-by-step.

Whisper vs. Vosk – What’s the difference? Whisper is an advanced neural network model released by OpenAI in 2022, trained on 680,000 hours of multilingual data (Introducing Whisper | OpenAI). It “approaches human level robustness and accuracy” on English speech recognition (Introducing Whisper | OpenAI), which makes it extremely accurate for transcribing spoken dialogue. It can handle many languages and even translate speech to English. However, Whisper’s high accuracy comes at the cost of heavy computation – larger Whisper models are slow on CPU and ideally need a capable GPU (the largest model has ~1.5 billion parameters and requires ~10 GB of VRAM (GitHub – openai/whisper: Robust Speech Recognition via Large-Scale Weak Supervision)).

Vosk, on the other hand, is an offline speech-to-text toolkit based on the Kaldi ASR project. Vosk models are generally smaller and faster on a CPU than Whisper, making them a good choice for less powerful PCs or quick transcriptions. For example, Vosk offers “small” 50 MB models (requiring ~300 MB RAM) that can even run on a Raspberry Pi, and “large” models (~2 GB) for higher accuracy that might use up to 16 GB RAM on a high-end CPU (VOSK Models).

In practice, users find Whisper often produces more accurate transcripts than Vosk, especially for diverse accents or technical content, whereas Vosk can transcribe faster. One user noted that transcribing a 50-minute video with Vosk’s English model took ~20 minutes, while Whisper’s medium model (more accurate) took about 77 minutes on the same PC (STILL Can’t install Faster-Whisper-XXL · Issue #8690 · SubtitleEdit/subtitleedit · GitHub). Whisper nailed proper nouns and complex terms with “near-perfect” accuracy, whereas Vosk’s output needed more editing (STILL Can’t install Faster-Whisper-XXL · Issue #8690 · SubtitleEdit/subtitleedit · GitHub).

In short: Whisper is usually more accurate and language-flexible, and Vosk is typically faster and lighter on resources. We’ll cover how to set up and use both so you can decide which fits your needs.
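The processing times in that user report are easiest to compare as a real-time factor (RTF): processing time divided by media duration, so values below 1 mean faster than real time. The minimal Python sketch below (the function names are hypothetical) computes the RTF from the quoted figures and extrapolates it to another file length. The extrapolation assumes the same PC, the same model and roughly linear scaling with duration, which real runs only approximate.

```python
def real_time_factor(processing_minutes: float, media_minutes: float) -> float:
    """Processing time divided by media duration (lower is faster)."""
    if media_minutes <= 0:
        raise ValueError("media duration must be positive")
    return processing_minutes / media_minutes


def estimate_processing_minutes(media_minutes: float, rtf: float) -> float:
    """Predict processing time for another file, assuming the same hardware and model."""
    return media_minutes * rtf


if __name__ == "__main__":
    # Figures from the user report above: a 50-minute video on the same PC.
    measured = {"Vosk (English)": (20, 50), "Whisper medium": (77, 50)}
    for engine, (proc, media) in measured.items():
        rtf = real_time_factor(proc, media)
        minutes = estimate_processing_minutes(90, rtf)
        print(f"{engine}: RTF {rtf:.2f}, roughly {minutes:.0f} min for a 90-minute film")
```

With these numbers Vosk comes out at an RTF of 0.4 and Whisper medium at 1.54, so a 90-minute film would take roughly 36 and 139 minutes respectively on that particular machine.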


System Requirements & Setup

Before diving in, ensure your PC meets the requirements for smooth operation:

- Operating System: Windows 10 or 11 (Subtitle Edit is a .NET application (Subtitle Edit 4.0.11 / 4.0.12 Beta Download Free – VideoHelp); Windows 7/8 may work but could have compatibility issues with newer features). Make sure you have the .NET Framework 4.8 or later installed (this is required to run Subtitle Edit on Windows (Subtitle Edit 4.0.11 / 4.0.12 Beta Download Free – VideoHelp)).

- CPU: A modern multi-core processor. For Vosk, even an Intel Core i3/i5 can handle the small models. For Whisper, a faster CPU (Core i7/Ryzen 7 or above) is recommended, especially for the larger models. Whisper’s largest model is very slow on a CPU – if you plan to use Whisper-Large, consider a machine with a high-end CPU or use a GPU.

- GPU (Optional): Highly recommended for Whisper. If you have an NVIDIA GPU, Subtitle Edit can leverage it via certain Whisper backends (like Faster Whisper or CTranslate2) to greatly speed up transcription. A GPU with at least 10–12 GB VRAM is needed for the biggest Whisper model (GitHub – openai/whisper: Robust Speech Recognition via Large-Scale Weak Supervision). Smaller models can run on GPUs with 4–8 GB. (Vosk currently runs on CPU only, but it’s efficient enough not to need a GPU).

- RAM: At least 8 GB for basic use. For Whisper medium or large models, 16 GB or more system RAM is better, since the model files themselves are large (around 1.5–2.9 GB) and transcription will load a lot into memory. Vosk’s big English model (~2 GB file) can also consume a few GB of RAM when running (VOSK Models). Ensure you have disk space for model files (several GB).

- Audio/Video Dependencies: Subtitle Edit uses FFmpeg and a media player (mpv) to handle video/audio. The first time you try to use the audio-to-text feature, Subtitle Edit will prompt you to download FFmpeg if it’s not already present (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)) (Automatically Generate Subtitles and Translations with Subtitle Edit). This is required to extract audio from your video. Similarly, when opening a video, it may ask to download the mpv player for playback – allow it to do so for video preview and waveform display. All these downloads are automatic through the Subtitle Edit interface.
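To turn the GPU guidance into a concrete choice, the sketch below maps available VRAM to the largest Whisper model that should fit. The VRAM figures are the approximate ones published in the openai/whisper README for OpenAI's reference implementation; optimized engines such as Faster-Whisper generally need less. The function name and the 1 GB safety headroom are assumptions for illustration, not Subtitle Edit settings.

```python
from typing import Optional

# Approximate VRAM (GB) needed by OpenAI's reference Whisper implementation,
# per the openai/whisper README. Optimized engines usually need less, so treat
# these as conservative upper bounds.
WHISPER_VRAM_GB = {
    "tiny": 1,
    "base": 1,
    "small": 2,
    "medium": 5,
    "large": 10,
}


def largest_fitting_model(available_vram_gb: float, headroom_gb: float = 1.0) -> Optional[str]:
    """Return the biggest model whose approximate VRAM need fits, else None.

    headroom_gb is a hypothetical safety margin left for the desktop and drivers.
    """
    budget = available_vram_gb - headroom_gb
    fitting = [name for name, need in WHISPER_VRAM_GB.items() if need <= budget]
    # Dicts keep insertion order (smallest to largest), so the last fit is the largest.
    return fitting[-1] if fitting else None


if __name__ == "__main__":
    for vram in (2, 4, 8, 12):
        print(f"{vram} GB VRAM -> {largest_fitting_model(vram)}")
```

With the default headroom, a 12 GB card maps to large and an 8 GB card to medium, which lines up with the 10–12 GB guideline for the biggest model.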

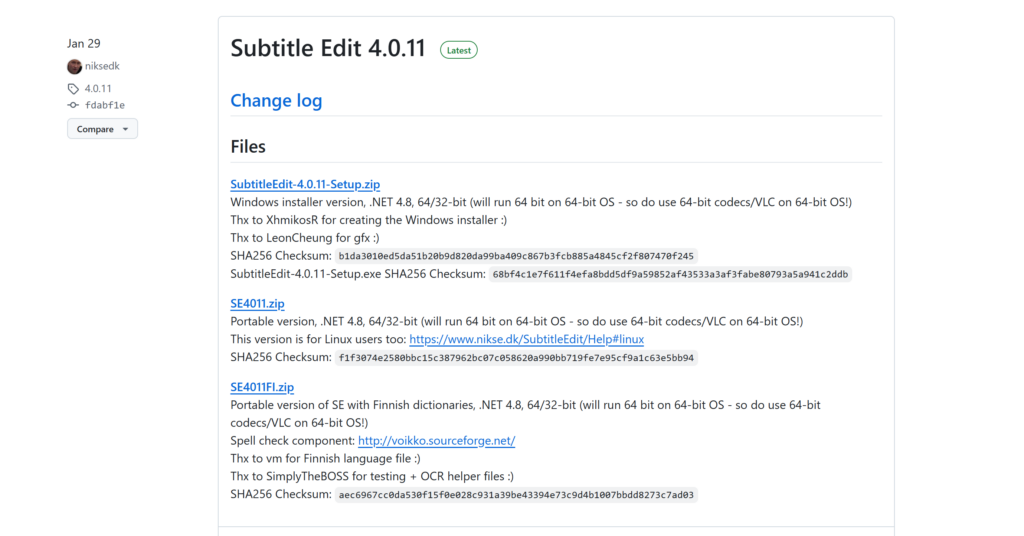

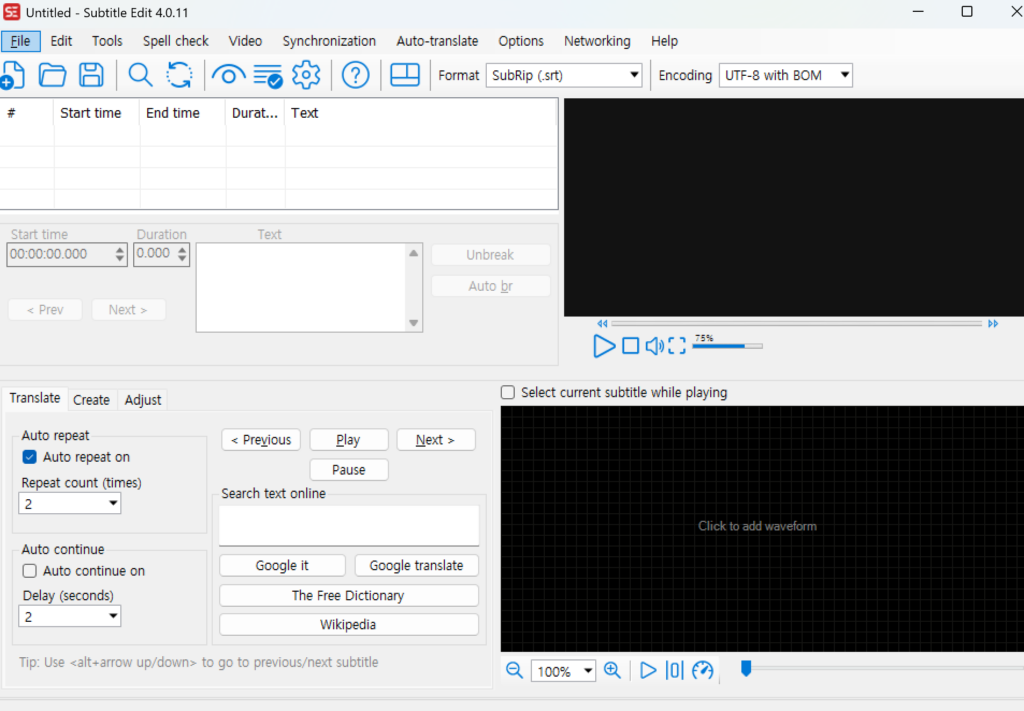

Installing Subtitle Edit: If you haven’t already, download the latest version of Subtitle Edit from the official website (or its GitHub releases page). Run the installer and follow the prompts (it’s a straightforward installation), or use the portable ZIP version if you prefer (just unzip it to a folder). On first launch, Subtitle Edit’s interface will appear. You’ll see a menu bar, a blank list for subtitle lines, and empty video and waveform panels. We’ll fill those in soon!

Downloading Whisper and Vosk models: The speech recognition engines require model files to work. Subtitle Edit will guide you to obtain these when needed:

- Whisper models: Subtitle Edit supports multiple Whisper backends (OpenAI’s default, Whisper.cpp, Faster Whisper, etc.). The simplest approach for beginners is to use the default Whisper implementation or the Faster Whisper engine, which will automatically prompt you to download a model of your choice. When you first use Whisper in Subtitle Edit, after selecting the engine and language, you’ll get a dropdown to choose a model (e.g., tiny, base, small, medium, large). If the model isn’t already on your PC, you can click the “Download” prompt (Subtitle Edit will fetch the model from the internet for you) or use the “Open models folder” link to see where to place model files (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)) (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)). For example, if you select medium.en, the program may ask to download a ~1.5 GB file – confirm and let it download. (Tip: Start with smaller models first to test the setup, as they download faster. You can always add larger models later. Whisper’s “.en” models are English-only and a bit more accurate for English audio (GitHub – openai/whisper: Robust Speech Recognition via Large-Scale Weak Supervision).) In case the auto-download doesn’t work due to network issues, you can manually download Whisper models from the official Hugging Face repository and place them in the %AppData%/Subtitle Edit/Whisper folder (or the folder shown by “Open models folder”). But in most cases, Subtitle Edit’s built-in downloader should handle it.

- Vosk models: When you use the Vosk/Kaldi speech recognition for the first time, Subtitle Edit will prompt you to download the Vosk engine (the underlying libvosk library) and then the actual language model. For example, if your audio is in English, it will suggest downloading a model like vosk-model-en-us-0.22. Just click OK on these prompts to let Subtitle Edit fetch the files (Automatically Generate Subtitles and Translations with Subtitle Edit). After the download, it may ask you to select the model or language – choose the appropriate language for your audio (e.g., English). The model is usually a zip file around 1.8 GB for the default large English model. Subtitle Edit will handle unzipping and installation. (If the automatic download fails for some reason, you can manually download a Vosk model from the official list and in Subtitle Edit click the “…” or Open models folder to locate it. The default location is typically %AppData%/Subtitle Edit/Vosk on Windows. Place the unzipped model folder there.)
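If you are unsure whether a manual download landed in the right place, a short script can list what is in the model folders. This Python sketch assumes the default %AppData%/Subtitle Edit location described above (portable installs keep their data elsewhere, so pass that folder explicitly) and assumes that a standard unzipped Vosk model contains am, conf and graph subfolders. The helper names are hypothetical.

```python
import os
from pathlib import Path
from typing import List, Optional


# Assumption: Subtitle Edit's data folder is %AppData%\Subtitle Edit on Windows,
# with "Whisper" and "Vosk" subfolders. For portable installs, pass base explicitly.
def subtitle_edit_dir(base: Optional[Path] = None) -> Path:
    if base is not None:
        return base
    appdata = os.environ.get("APPDATA")
    if not appdata:
        raise RuntimeError("APPDATA is not set; pass the Subtitle Edit folder explicitly")
    return Path(appdata) / "Subtitle Edit"


def list_model_files(folder: Path) -> List[str]:
    """Relative paths of every file under a models folder (empty if the folder is missing)."""
    if not folder.is_dir():
        return []
    return sorted(str(p.relative_to(folder)) for p in folder.rglob("*") if p.is_file())


def missing_vosk_parts(model_dir: Path) -> List[str]:
    """Expected subfolders (assumed: am, conf, graph) absent from an unzipped Vosk model."""
    return [name for name in ("am", "conf", "graph") if not (model_dir / name).is_dir()]


def report(root: Path) -> None:
    print("Whisper files:", list_model_files(root / "Whisper") or "none found")
    # glob() on a missing folder simply yields nothing
    for model in sorted(p for p in (root / "Vosk").glob("vosk-model-*") if p.is_dir()):
        gaps = missing_vosk_parts(model)
        print(f"{model.name}: {'OK' if not gaps else 'missing ' + ', '.join(gaps)}")


if __name__ == "__main__" and os.environ.get("APPDATA"):
    report(subtitle_edit_dir())
```

A model reported as missing all three subfolders is often one that was unzipped into an extra nested folder; moving the inner folder up one level usually fixes it.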

With Subtitle Edit installed and the required components in place (FFmpeg, mpv, and your chosen speech model files), you’re ready to generate subtitles using AI. Next, we’ll go through the process step by step.

Step-by-Step Subtitle Generation

Now let’s walk through using Subtitle Edit to automatically transcribe a video. We’ll cover both Whisper and Vosk methods. The process is similar for both, with just a couple of differences in the settings:

- Launch Subtitle Edit and open your media file. Start Subtitle Edit and go to the top menu bar. Click Video > Open video file… to load the video or audio you want to transcribe. Navigate to your file (common formats like MP4, MKV, MP3, WAV are supported) and open it. The video will appear in the top-right preview pane, and its waveform can be shown at the bottom (if not visible, you can enable the waveform via Video > Show/hide waveform). Ensure the file is playing correctly (you can press the play button to test audio).

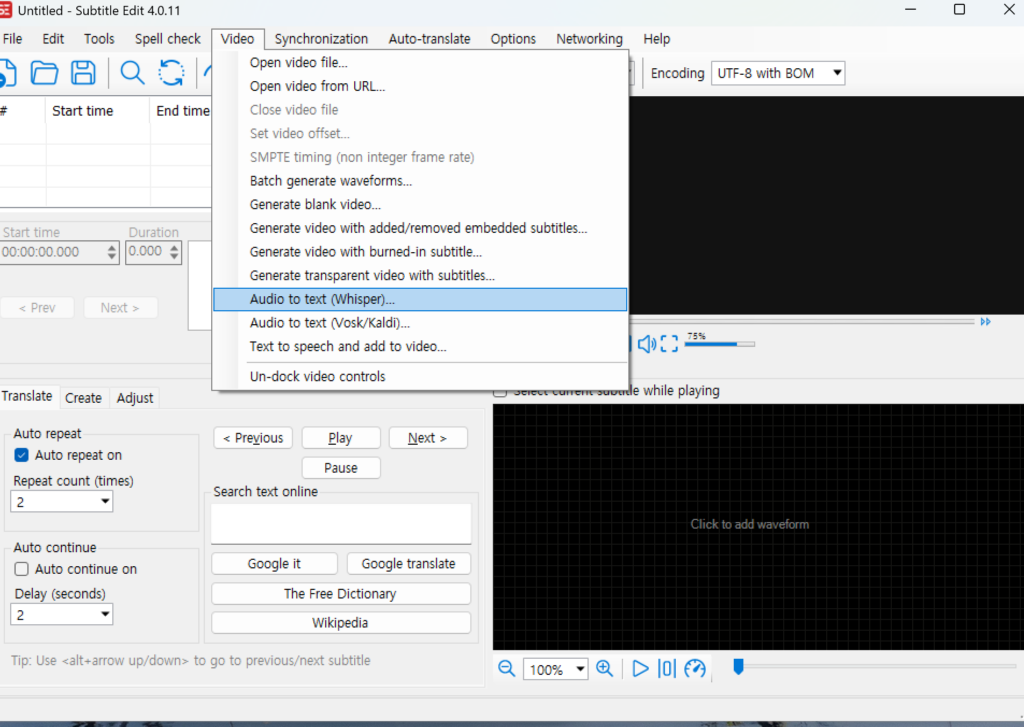

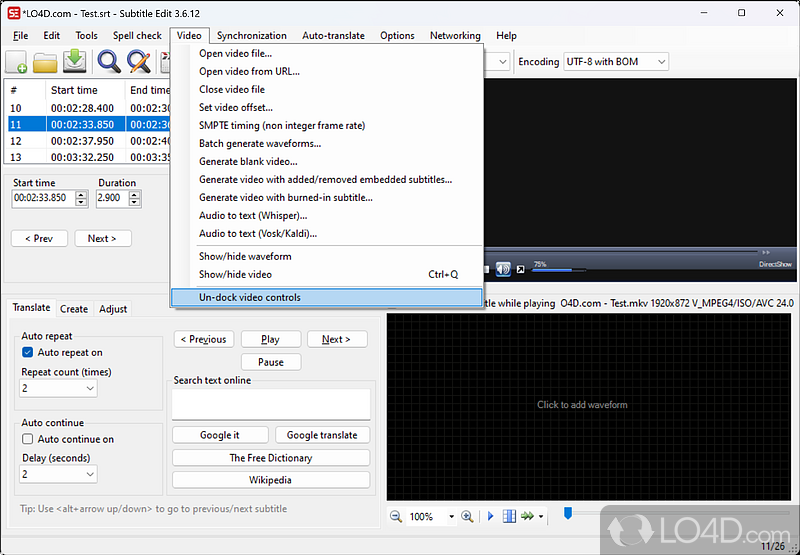

- Access the Audio-to-Text feature and choose Whisper or Vosk. With the video loaded, open the Video menu again. You will see two options for audio transcription: “Audio to text (Whisper)…” and “Audio to text (Vosk/Kaldi)…”. Choose the one you want to use. Screenshot (Subtitle Edit – Screenshots): Subtitle Edit’s “Video” menu, where you open files and choose the Audio to text function (Whisper or Vosk/Kaldi) for AI transcription.

- Configure language and model settings. In the Audio to Text dialog, you’ll configure how the transcription will run. Let’s break it down for each engine:

- Using Whisper: At the top of the dialog, you’ll see a dropdown for Engine. Subtitle Edit supports multiple Whisper implementations. For beginners, select “OpenAI” (the default Whisper) or “Purfview’s Faster-Whisper” if available, as these are straightforward. Faster-Whisper is an optimized version that runs faster and uses less VRAM (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)), so it’s great if you have a GPU or want quicker results. Next, choose the Language of the audio; for example, select “English” if your video’s spoken language is English. Then choose a Model from the list, e.g. medium.en (1.5 GB) for a balance of speed and accuracy, or small.en for faster but slightly less accurate results. (If you want maximum accuracy and have a strong PC or GPU, you could try large.) If the model file isn’t found, click the “… (browse)” or “Download” button that appears to fetch it. Make sure “Auto adjust timings” is checked – this lets Subtitle Edit automatically adjust subtitle timing for you (splitting or merging as needed for readability). Also keep “Use post-processing (line merge, fix casing, punctuation, and more)” checked – this option will intelligently merge short lines, split overly long lines, and apply basic punctuation/capitalization fixes to the raw transcription. You can click the Settings link next to it to fine-tune these rules (for example, how short is “too short” for a line merge) or just use the defaults. Finally, you can optionally use the “Advanced” button to set Whisper-specific parameters (like beam search size or temperature), but this is not necessary for beginners; leaving the defaults (or a preset such as --standard) is fine. Your dialog should then look like the screenshot in Using Subtitle Edit to create amazing audio to text SRT transcripts – St-Cyr Thoughts: Engine set to Faster-Whisper, language set to English, the medium.en model selected, and the options to auto-adjust timing and post-process the output for better formatting enabled.

- Using Vosk: The Vosk audio-to-text dialog is a bit simpler. You may not have multiple “engine” options since Vosk is a specific engine (there might just be a label or dropdown for the language model). Ensure the Language/Model is set correctly (Subtitle Edit might auto-select the model you downloaded, e.g., English). If you have multiple Vosk models installed, you might choose between a small or large model here. There are typically fewer settings – Vosk will segment the subtitles as it transcribes. Still, you should see an option for “Auto adjust timings” and “Use post-processing” similar to Whisper – keep those enabled for better results (these features work for both engines). Vosk doesn’t provide translation to English (it transcribes in the source language only), so the dialog has no “Translate to English” option.

- Add the file to the input list (if needed). In the Whisper dialog, there is an Input section where you can add files. If your video wasn’t automatically added, click the Add… button and select your video/audio file (you should see the filename appear in the list). Usually, if you opened the video in step 1, Subtitle Edit will pre-load it into this list for you. You can actually add multiple files here to run a batch transcription one after another (there is also a Batch mode button for processing files in bulk). For now, ensure your one target file is in the input list. Vosk’s dialog works similarly – make sure your file is listed as the input.

- Start the transcription. Everything is set – now click the Generate button to begin the AI transcription. Subtitle Edit will begin processing the audio. A progress bar will appear, and you’ll likely see messages logging the progress (you can press F2 to show a log window if you want detailed output). This may take some time depending on your file length, chosen model, and hardware. For example, Whisper medium on a CPU might transcribe at about 0.5x–1x real-time (a 10-minute video could take 10–20 minutes). With a GPU or a small model, it will be faster. Vosk usually works faster on CPU – perhaps 2x real-time or more depending on model. While it runs, you can monitor CPU/GPU usage on your system – Whisper tends to be CPU-intensive (or GPU-intensive if using one). Don’t be alarmed if it takes a while; longer videos especially will need patience. (Note: Subtitle Edit might seem unresponsive during processing – let it finish. In Whisper’s case, the progress bar might not estimate remaining time perfectly. In Vosk’s case, you might see a more immediate progress.)

- Review the generated subtitles. Once complete, the dialog will close and you’ll return to the main Subtitle Edit window, now filled with subtitle lines! Each line will have start/end timestamps and the transcribed text. Play back your video (press the play button or hit the spacebar) to see the subtitles in action. They should appear in sync with the dialogue. It’s important to proofread the text: even the best AI can make transcription errors or punctuation mistakes. Whisper’s output, for example, will include punctuation and capitalization, and with post-processing on, you’ll see that Subtitle Edit capitalized the first letter of sentences and added periods where appropriate. Vosk’s output might be all lower-case and without punctuation (depending on version), since it focuses on raw transcription – Subtitle Edit’s post-processing can add basic capitalization, but you might need to add punctuation yourself. Read through and correct any errors by clicking on a subtitle line and editing the text in the text box. Also check whether any subtitle is too long to read comfortably; Subtitle Edit’s post-processor usually splits or merges lines to optimal lengths, but it’s good to double-check. You can use the waveform display to visually verify that subtitle boundaries align with the speech. Adjust timing if needed (Subtitle Edit has tools to nudge or split lines). Overall, if you used Whisper with a decent model, you may be pleasantly surprised at how accurate the transcription is – minimal editing might be needed (Using Subtitle Edit to create amazing audio to text SRT transcripts – St-Cyr Thoughts) (STILL Can’t install Faster-Whisper-XXL · Issue #8690 · SubtitleEdit/subtitleedit · GitHub). Vosk users might need a bit more manual correction, especially for names or unusual terms.

- Save the subtitles. Finally, save your generated subtitles. Go to File > Save As and choose a format – the common choice is SubRip (.srt), which is widely supported. You can also choose WebVTT (.vtt), Advanced Substation Alpha (.ass), or any of the 250+ formats Subtitle Edit supports. Give it a filename (matching your video name is usually wise, e.g., MyVideo_en.srt). Now you have a subtitle file created automatically! You can use it as-is or refine it further in Subtitle Edit.

- (Optional) Translate or export with video: If needed, Subtitle Edit can also auto-translate the subtitles to another language via Google Translate or DeepL (in the menu Auto-translate). And if you want to burn-in the subtitles into the video, you can use Video > Generate video with burned-in subtitles after you’re done editing. These are beyond our main scope, but it’s good to know the tool has these additional features.
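Subtitle Edit writes the .srt file for you on File > Save As, but it helps to know what SubRip actually stores: numbered cues, each with a "start --> end" line in HH:MM:SS,mmm format, followed by the text and a blank line. The sketch below, built around a hypothetical Cue type, produces that layout from cue timings in seconds. It can be handy if you post-process transcripts with your own scripts.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format seconds as a SubRip timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: Iterable[Cue]) -> str:
    """Build SRT text: index line, time line, text, then a blank line between cues."""
    blocks = []
    for index, cue in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(cue.start)} --> {srt_timestamp(cue.end)}\n{cue.text}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    example = [Cue(1.0, 3.5, "Hello there."), Cue(3.8, 6.0, "Welcome to the video.")]
    print(to_srt(example))
```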

By following these steps, you’ve leveraged AI to generate subtitles in a fraction of the time it would take to type them manually. Next, we’ll address some common issues and tips to get the best results.

Troubleshooting & Optimization Tips

Even with the above instructions, you might encounter a few hiccups or want to optimize performance. Here are some common issues and tips:

- Whisper model download issues: If Whisper models fail to download within Subtitle Edit (this can happen due to timeouts or network issues), remember that you can download the model files manually. The developer of Subtitle Edit provided direct links to the models on the help page (Whisper model doesn’t download · Issue #6584 · SubtitleEdit/subtitleedit · GitHub). For example, you can download medium.en.pt (for Whisper) from an official source and place it in the %AppData%/Subtitle Edit/Whisper folder. After doing so, Subtitle Edit should detect it (you can use “Open models folder” to verify that the file is there). If you use the Whisper backend that relies on the OpenAI Python module, make sure Python and the Whisper package are installed (pip install openai-whisper); however, in the latest versions the C++ based engines are preferred and do not require a separate Python installation.

- Vosk not starting or “Try again later” error: This usually means the Vosk model failed to download or load. The first time, Subtitle Edit downloads libvosk.dll and the model. If something went wrong, you might see the error “unable to complete download, try again later” (Speech to Text in Subtitle Edit 3.6.5 and forward – VideoHelp Forum) (Speech to Text in Subtitle Edit 3.6.5 and forward – VideoHelp Forum). To fix this, manually download the Vosk model and put it in the correct folder as described in the setup. Make sure the folder structure is correct (e.g., the vosk-model-en-us-0.22 folder should directly contain the model’s subfolders, such as am, conf and graph). Then restart Subtitle Edit and try again. Also, go to Options > Settings > Waveform and make sure “Use FFmpeg for wave extraction” is enabled (this ensures the audio is fed correctly to Vosk). If you’re on Windows 7 and have SSL issues downloading, there is a known issue – updating your .NET Framework or using a newer version of Windows can solve it (Subtitle Edit 3.6.10 new version with Whisper option – VideoHelp Forum).

- CPU running very slow / high usage: Transcription is CPU-intensive. If Whisper is unbearably slow on your machine, consider using a smaller model. For instance, if you tried large and it’s crawling, switch to base or small – you will lose some accuracy, but it might be worth the speed gain. You can also try the Whisper CPP or Faster Whisper engines, which are optimized in C++ and can be faster than the default Python implementation. One forum user noted that using Whisper’s large model on an i5 CPU was not feasible (“large model is not going to work” without a powerful GPU) (Steps & Settings for use of Whisper in Subtitle Edit ? – VideoHelp Forum). So don’t overload your system – pick the model size appropriate for your hardware. On the flip side, if accuracy is paramount, run the bigger model and just be patient (or offload to a better machine). You can monitor progress by pressing F2 for the log – you’ll at least see that it’s transcribing chunk by chunk.

- GPU not being used (Whisper): Subtitle Edit doesn’t use the GPU unless you select an engine that supports it. For example, Faster Whisper (with GPU) uses your NVIDIA GPU via CUDA, while Const-me’s Whisper uses the GPU through DirectCompute. Make sure the necessary CUDA/cuDNN libraries are installed if the engine requires them. If you have installed the Whisper model but it’s still using the CPU, check Reddit or forum threads for guidance on enabling the GPU. In many cases, the Faster Whisper option will detect the GPU and use it. You might also try the CTranslate2 engine in the dropdown, which can use the GPU for inference. When correctly set, you should see your GPU usage spike during transcription (and CPU usage drop). This can cut large-model processing time from, say, about 8 times the media’s duration on a CPU to about half its duration on a good GPU – a massive improvement. In short, if you have a decent GPU, leverage it!

- Accuracy issues / weird transcription output: If the generated text has many errors or odd segments, consider these factors: (a) Is the audio clear? Background noise or heavy accents can still trip up models. Whisper is trained for robustness to some noise (Introducing Whisper | OpenAI), but it’s not magic – bad input yields bad output. You might preprocess the audio (e.g., use Audacity to reduce noise) for better results. (b) Choose the correct language manually. Whisper can auto-detect language, but in Subtitle Edit you typically specify it – make sure it matches the spoken language. (c) Try a larger model for better accuracy. If you used tiny or base and got a lot of mistakes, step up to small or medium. The trade-off is speed. Also, ensure post-processing was on – it can significantly improve formatting (it won’t change word accuracy, but it makes the output more readable by adding punctuation and casing, so you can understand the text better to correct it). (d) For multi-speaker audio or overlapping speech, both engines might struggle. You may need to manually split subtitles if two people talk simultaneously (the AI will merge them).

- Subtitle segmentation and timing: Whisper and Vosk handle timing differently. Vosk (Kaldi) tends to produce more finely segmented subtitles with precise timing per phrase (Enhanced audio-to-text comparison: VOSK vs Whisper in Subtitle Edit), which is good for accuracy but sometimes yields very short lines. Whisper (especially via some implementations) might output a continuous block of text with fewer segments (Enhanced audio-to-text comparison: VOSK vs Whisper in Subtitle Edit). Subtitle Edit’s post-processing usually breaks those into reasonable subtitle lines. If you find Whisper’s subtitles are too long time-wise (covering 30 seconds in one subtitle, for example), you can use the Split by sentences feature or simply enable the splitting options in post-processing (which we did). On the other hand, if subtitles are too granular (too many), you can merge lines that are too short. The goal is to have each subtitle last 1–6 seconds and contain a full thought or sentence that a viewer can comfortably read. Use Tools > Merge short lines or Tools > Apply duration limits to tidy up segmentation after the auto-transcription. Also, play the video and watch the subtitles – adjust the timing if a subtitle consistently appears a bit earlier or later than the speech. Minor shifts can be done by selecting all lines (Ctrl+A) and using Synchronization > Adjust all times if needed.

- Multilingual videos: Whisper can detect and transcribe multiple languages, but Subtitle Edit currently allows choosing one language at a time for transcription. If your video has sections in different languages, you might have to run the audio-to-text for each language separately (e.g., transcribe once with the language set to Spanish for the Spanish parts, then again in English for the English parts). Alternatively, run it in one language (the dominant one) and be aware that other language parts will be gibberish – you’ll need to fix those manually or by re-running Whisper on just those segments. Vosk’s models are language-specific (it won’t handle mixed languages in one go). For translation (say you want English subtitles from non-English speech), Whisper has a translation mode (task=translate), which you can use in Subtitle Edit’s Whisper dialog by ticking “Translate to English”. Keep in mind translation may not be perfect; you might prefer transcribing in the original language and then using the auto-translate feature separately.

- Common errors and fixes: If you see a lot of [inaudible] or [Music] tags, note that Whisper (the open-source version) doesn’t insert these systematically – such tags usually come from services like YouTube. Whisper will try its best to transcribe everything, even background words. If something truly can’t be understood, it might just output a guess or nothing. You can manually insert tags like [inaudible] or [background music] if needed for clarity. For Vosk, if it produces random words that don’t fit, it could be a sign that it misheard due to noise. Edit those or re-run with a better model. Always save your work frequently once you start editing the subtitles manually, to avoid losing changes.
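The segmentation advice above (cues of roughly 1–6 seconds, merging fragments that are too short, shifting every cue when subtitles are consistently early or late) can be expressed as a few small functions. This Python sketch is an illustration under assumptions, not how Subtitle Edit’s own merge, duration-limit or Adjust all times tools are implemented. The 0.5-second gap threshold, the Cue type and the function names are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def flag_durations(cues: Sequence[Cue], min_s: float = 1.0, max_s: float = 6.0) -> List[int]:
    """Indexes of cues shorter than min_s or longer than max_s."""
    return [i for i, c in enumerate(cues) if not (min_s <= c.end - c.start <= max_s)]


def merge_short(cues: Sequence[Cue], min_s: float = 1.0, max_gap_s: float = 0.5,
                max_s: float = 6.0) -> List[Cue]:
    """Merge a too-short cue into the following cue when the two nearly touch
    and the merged cue would not exceed max_s. A short final cue is left as-is."""
    merged: List[Cue] = []
    for cue in cues:
        if merged:
            prev = merged[-1]
            too_short = prev.end - prev.start < min_s
            close = cue.start - prev.end <= max_gap_s
            fits = cue.end - prev.start <= max_s
            if too_short and close and fits:
                merged[-1] = Cue(prev.start, cue.end, f"{prev.text} {cue.text}")
                continue
        merged.append(cue)
    return merged


def shift_all(cues: Sequence[Cue], offset_s: float) -> List[Cue]:
    """Move every cue by offset_s (negative = earlier); times are clamped at 0."""
    return [replace(c, start=max(0.0, c.start + offset_s), end=max(0.0, c.end + offset_s))
            for c in cues]


if __name__ == "__main__":
    cues = [Cue(0.0, 0.4, "Yes."), Cue(0.5, 2.0, "I agree."), Cue(2.5, 10.0, "A long monologue...")]
    print("out of range:", flag_durations(cues))
    print(merge_short(cues))
```

Here the 0.4-second "Yes." is merged into the next cue, while the 7.5-second cue is only flagged, since splitting it sensibly needs sentence boundaries, which is what Subtitle Edit’s splitting options handle.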

Optimizing for speed: If you need faster processing and have a capable CPU, using Vosk’s small model can be an option – though accuracy will drop, it might still be serviceable for simpler content. Also consider using batch mode in Subtitle Edit if you have many files; you can queue them up and let it run overnight. For Whisper, if you’re tech-savvy, you can also run the Whisper model directly via Python or use third-party wrappers to possibly utilize mixed precision or GPU more efficiently, but for most users the integration in Subtitle Edit is the easiest route. The Faster-Whisper engine is a good middle ground – one user observed that “Faster-Whisper is much faster & better” in some cases (Steps & Settings for use of Whisper in Subtitle Edit ? – VideoHelp Forum). It’s built on a more optimized inference library. So experiment with those engine options in the Whisper dialog (they are there for a reason – speed vs. accuracy tuning).
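For readers who want to script Whisper outside Subtitle Edit, the faster-whisper Python package (built on CTranslate2) exposes a WhisperModel class whose transcribe() method takes an audio path plus options such as language, task and beam_size, and returns a lazy generator of segments together with an info object. The sketch below assumes faster-whisper is installed (pip install faster-whisper). The device and compute_type choices (int8 on CPU, float16 on an NVIDIA GPU) and the helper names are illustrative assumptions. Passing task="translate" corresponds to Whisper’s translate-to-English mode.

```python
from typing import Iterable, List, Optional, Tuple


def format_segments(segments: Iterable) -> List[str]:
    """Render objects with .start/.end (seconds) and .text as readable lines."""
    return [f"[{seg.start:7.2f} -> {seg.end:7.2f}] {seg.text.strip()}" for seg in segments]


def transcribe(path: str, model_size: str = "medium", language: Optional[str] = "en",
               task: str = "transcribe", use_gpu: bool = False) -> Tuple[List[str], str]:
    # Imported lazily so format_segments() works even without the package installed.
    from faster_whisper import WhisperModel

    if use_gpu:
        # Assumed setup: NVIDIA GPU with CUDA/cuDNN available.
        model = WhisperModel(model_size, device="cuda", compute_type="float16")
    else:
        model = WhisperModel(model_size, device="cpu", compute_type="int8")
    # language=None lets Whisper auto-detect; task="translate" outputs English text.
    segments, info = model.transcribe(path, language=language, task=task, beam_size=5)
    # Segments are produced lazily, so transcription actually runs while formatting.
    return format_segments(segments), info.language


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:
        lines, lang = transcribe(sys.argv[1])
        print("language:", lang)
        print("\n".join(lines))
```

For example, transcribe("interview.mp4", language="es", task="translate", use_gpu=True) would ask for English text from Spanish speech. As in Subtitle Edit, check the translated output carefully.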

Additional Expert References

To give you confidence in these tools, here are some references and insights from experts and the community about Whisper, Vosk, and Subtitle Edit:

- OpenAI’s Whisper Research – Whisper was introduced by OpenAI in 2022. In their official release, OpenAI stated that “We’ve trained and are open-sourcing a neural net called Whisper that approaches human level robustness and accuracy on English speech recognition.” (Introducing Whisper | OpenAI). The model’s ability to handle diverse accents and background noise was achieved by training on a massive dataset (Introducing Whisper | OpenAI). If you’re interested in the technical side, you can read the paper by Radford et al. (2022) (GitHub – openai/whisper: Robust Speech Recognition via Large-Scale Weak Supervision) which details Whisper’s Transformer architecture and performance across languages. Whisper’s high accuracy for English makes it a top choice for transcription when quality is paramount.

- Vosk and Kaldi ASR – Vosk is built on the Kaldi speech recognition toolkit, which has been a popular open-source ASR framework in academia. The Alpha Cephei team behind Vosk provides models for many languages. According to their published benchmarks, the large English Vosk model (Vosk 0.22) achieves about 5.69% word error rate on the standard LibriSpeech test-clean set (VOSK Models), which is quite solid (for comparison, human transcription error on clean speech can be around 5% or less). This shows Vosk can be very accurate on clear audio. However, Whisper’s enormous training set gives it an edge on more varied “in the wild” audio. An independent tester compared Vosk and Whisper in Subtitle Edit and found that Whisper performed better on real-world videos, with virtually “no edits required” in some cases (Using Subtitle Edit to create amazing audio to text SRT transcripts – St-Cyr Thoughts). Use cases may vary, though – Vosk might excel in scenarios where quick, near-real-time transcription is needed on-device (it’s even used in some mobile apps due to its lightweight models).

- User experiences and community tips – There’s an active community around Subtitle Edit. For example, the VideoHelp forums have threads where users share their experiences with the new Whisper integration. One user initially struggled with setup, but after upgrading and getting the models in place, they reported successful transcription of a full-length film (90 minutes) – it took about 8 hours on an older i5 CPU using Whisper, but the result was quite good (Steps & Settings for use of Whisper in Subtitle Edit ? – VideoHelp Forum) (Steps & Settings for use of Whisper in Subtitle Edit ? – VideoHelp Forum). Another user responded with advice that using a GPU dramatically improves Whisper’s speed, and to “stick to Whisper and NOT VOSK” for best accuracy in their opinion (Steps & Settings for use of Whisper in Subtitle Edit ? – VideoHelp Forum). On Reddit’s r/SubtitleEdit, users have discussed troubleshooting model downloads and using alternatives like faster-whisper. These anecdotal reports align with the general consensus: Whisper yields excellent transcripts (even for YouTube content, podcasts, etc.), while Vosk is a reliable fallback if you can’t use Whisper.

- Performance considerations – If you want to optimize further or use these engines outside Subtitle Edit: Whisper’s code is open-source (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)), and there are reimplementations like Whisper.cpp (Whisper in C++, which can run on CPU with lower memory) and Faster-Whisper (built on the CTranslate2 inference engine, with GPU support). Subtitle Edit actually supports these – the “Engine” dropdown includes options like “Whisper (CPP)” and “Whisper (Const-me GPU)” (Tutorial: How to run Whisper via Subtitle Edit – Luis Damián Moreno García (PhD, FHEA)). The community has found that Whisper CPP can be faster on CPU than the original, and Const-me’s DirectCompute-based version allows GPU usage even on AMD GPUs. So Subtitle Edit is quite extensible if you have specific needs. Meanwhile, Vosk’s code is also on GitHub and its models are freely downloadable; both have been used in various AI transcription projects for offline use.

- Accuracy in different languages – Whisper’s strength is its multilingual support. It can transcribe and even translate between many languages. Vosk has separate models per language; some languages (like Danish, as a user pointed out (Whisper model doesn’t download · Issue #6584 · SubtitleEdit/subtitleedit · GitHub)) might not have a well-trained Vosk model, whereas Whisper can handle Danish with its general model. If your project involves languages beyond English, Whisper is likely the better choice. For English-only work, both engines are viable, but Whisper’s largest model will generally outperform Vosk in transcription quality (with the caveat of needing more resources).
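Word error rate, the metric behind the 5.69% figure above, counts the substitutions (S), deletions (D) and insertions (I) needed to turn a recognizer’s output into the reference transcript, divided by the number of reference words (N): WER = (S + D + I) / N. The Python sketch below computes it with a word-level edit distance. It only lowercases and splits on whitespace, whereas published benchmarks apply their own text normalization, so its scores are not directly comparable to the Vosk table.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein distance."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words and first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(word_error_rate(reference, "the cat sit on mat"))
```

In the example, one substitution (sat to sit) and one deletion (the second "the") over six reference words give a WER of 2/6, about 33%. Scoring the Vosk and Whisper output for the same short clip against a hand-corrected transcript is a simple way to compare the engines on your own material.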

We’ve cited official documentation and user reports throughout this guide (see the inline references). These should give you further reading and assurance of the capabilities described. The combination of Subtitle Edit with Whisper or Vosk brings cutting-edge speech-to-text research right to your desktop in a user-friendly way.

Conclusion

In summary, Subtitle Edit’s automatic subtitle generation with Whisper and Vosk can save you countless hours. We introduced Subtitle Edit as a powerful, beginner-friendly tool and compared the two AI engines: Whisper (from OpenAI, with superior accuracy and multi-language support) and Vosk (an efficient offline recognizer with faster CPU performance). We covered how to install and configure everything on Windows, including hardware recommendations (use a GPU for Whisper if possible, and ensure you have enough RAM and the correct models downloaded). The step-by-step tutorial walked you through opening a video, choosing Whisper or Vosk, adjusting settings, and generating subtitles. We also went over troubleshooting tips for common issues like model downloads, resource usage, and improving transcription quality.

For different use cases, here are some final recommendations: If you need the highest accuracy and are working with content where every word counts (e.g., professional captions, multi-accent interviews, or foreign languages), try Whisper with at least a medium or large model – it’s likely to produce the best results (STILL Can’t install Faster-Whisper-XXL · Issue #8690 · SubtitleEdit/subtitleedit · GitHub). Just be prepared for longer processing times, or use a good GPU to speed it up. If you’re on a lower-end PC or need quick drafts, Vosk with a smaller model can churn out subtitles faster; the text might require a bit more editing, but it’s still much faster than manual transcription. For English-only, clear audio, Vosk’s large model might actually do quite well and nearly match Whisper on accuracy while being speedier. But for noisy audio or diverse content, Whisper’s robustness will shine through (Introducing Whisper | OpenAI). Many users, after experimenting, stick with Whisper because the time saved in editing the output (thanks to higher accuracy and auto-formatted results) outweighs the longer generation time. In any case, Subtitle Edit gives you the flexibility to choose either engine with just a menu selection, so you can’t go wrong – you might even use Whisper for one project and Vosk for another, depending on needs.

By following this comprehensive guide, even a complete beginner should be able to set up Subtitle Edit on Windows and generate subtitles using AI. The combination of detailed instructions and visuals above should make the process clear. Give it a try with a short video to get the hang of it. Once you see the subtitles appearing automatically, it feels a bit like magic. With a little cleanup, you’ll have professional-quality subtitles ready to go. Happy subtitling!

By now, you’ve mastered how to auto-generate subtitles in Subtitle Edit using Whisper and Vosk. With this guide, you can create subtitles faster and more accurately, making AI-powered subtitle editing a seamless experience.
