🌍 Introduction — The Rise of AI Fake Humans

In 2025, artificial intelligence no longer just generates data or assists with code — it generates people. From smiling faces in advertisements to photorealistic “donors” in charity campaigns, the digital world is now filled with AI fake humans that look, act, and even “feel” real.

Recently, an online controversy over AI-generated donor images reignited global debate on AI image ethics 2025. The question is not simply how realistic AI images have become, but how real we allow them to feel.

As synthetic identities become indistinguishable from authentic human presence, society faces a deeper crisis — a crisis of trust. The line between creation and deception blurs, and ethics become more than a philosophical concern; they become a necessity for survival in the information age.

🧩 The Technology Behind AI-Generated Images

AI image generation tools such as DALL·E 3, Midjourney, Stable Diffusion, and Adobe Firefly are built largely on diffusion models: networks that learn to turn random noise into an image, often working in a compressed latent space rather than on raw pixels. Trained on very large collections of images, including human faces and artistic styles, these systems create synthetic humans that do not exist but appear convincingly real.
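For readers curious what "diffusion" means in practice, here is a minimal sketch of the forward (noising) half of the process, assuming the linear noise schedule often used in textbook descriptions. The function names and the four-value toy "image" are illustrative assumptions and do not reflect how any of the products above are actually implemented. Real systems also train a neural network to reverse this process, which is not shown.

```python
import math
import random

def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (an assumed, textbook-style schedule)."""
    if steps == 1:
        return [beta_start]
    span = beta_end - beta_start
    return [beta_start + span * t / (steps - 1) for t in range(steps)]

def alpha_bar(betas):
    """Cumulative product of (1 - beta): how much of the original signal survives."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out

def noise_pixels(pixels, t, alpha_bars, rng):
    """Sample the noised image at step t directly from the clean one:
    x_t = sqrt(a) * x_0 + sqrt(1 - a) * gaussian_noise, with a = alpha_bars[t]."""
    a = alpha_bars[t]
    return [math.sqrt(a) * p + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for p in pixels]

if __name__ == "__main__":
    rng = random.Random(0)
    bars = alpha_bar(linear_beta_schedule(1000))
    image = [0.8, -0.2, 0.5, 0.1]  # toy "image": four pixel values in [-1, 1]
    for t in (0, 499, 999):
        print(t, noise_pixels(image, t, bars, rng))
```

At early steps the output is almost the original values. By the last step it is nearly pure noise, and a generator's job is to learn the path back from that noise to a plausible face.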

These tools democratize creativity. Anyone can produce a high-quality portrait or marketing image within seconds. However, the same power also introduces ethical complexity — who owns an AI-generated image? Who ensures it doesn’t mislead or exploit?

In the age of instant content, AI image ethics 2025 has become one of the most critical debates shaping how humans interact with truth itself.

🎭 When “Fake” Feels Real — The Psychology of Synthetic Identity

Humans are naturally wired to trust faces. A single smile or gaze triggers emotional empathy. That’s what makes AI-generated images so powerful — they bypass critical reasoning and appeal directly to emotion.

In the case of AI fake humans, the viewer’s mind fills in the blanks. A synthetic face looks friendly, trustworthy, and real, even when no actual person exists. This psychological vulnerability fuels both commercial manipulation and political propaganda.

The result? The world’s emotional attention can now be algorithmically engineered.

As AI image ethics 2025 gains attention, experts warn that we are not simply facing a technical issue, but a cognitive manipulation crisis — a world where emotion replaces evidence.

⚖️ Ethical Pillars: Transparency, Consent, and Accountability

To navigate the deepfake era, three ethical principles stand as the foundation of AI image ethics 2025:

- Transparency: Every AI-generated image must clearly disclose its synthetic nature. Without it, audiences are unknowingly deceived, regardless of intent.

- Consent: Training data should not include private or identifiable human faces without permission. Current lawsuits against AI companies highlight how easily digital likenesses are exploited.

- Accountability: When an AI-generated image spreads misinformation or harm, who takes responsibility: the developer, the user, or the algorithm itself?

These principles are echoed across international frameworks like the OECD AI Principles (2019), UNESCO’s Recommendation on the Ethics of Artificial Intelligence (2021), and the EU AI Act (adopted in 2024), which aim to regulate transparency and accountability in creative AI outputs.

Yet enforcement remains weak. The technology evolves faster than any legal response, leaving ethics largely in the hands of creators — or worse, marketers.

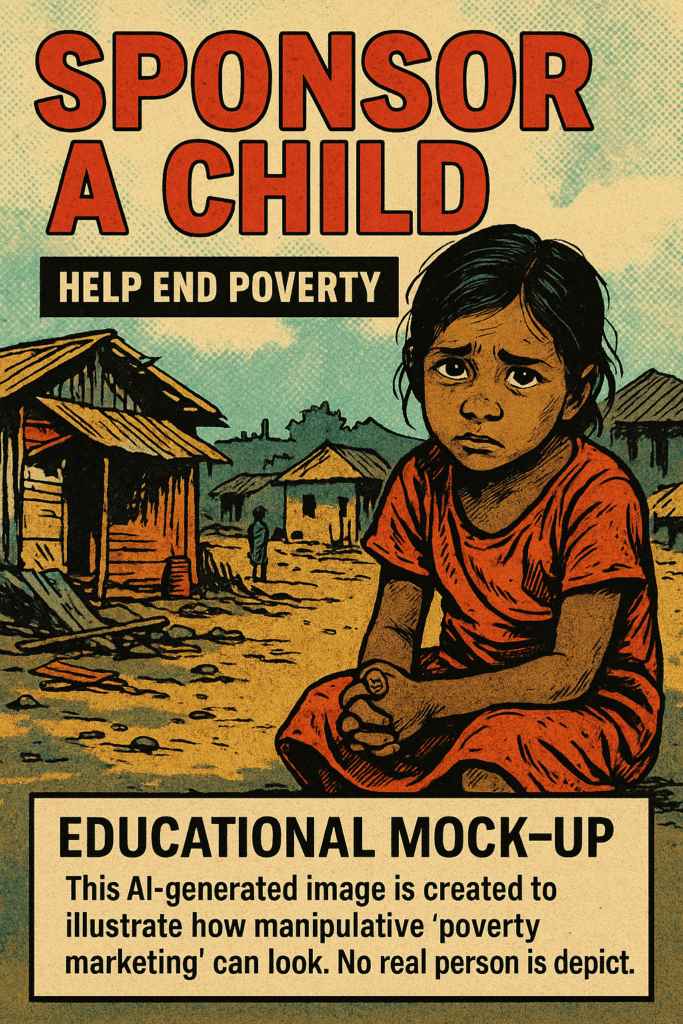

🔍 The “Fake Donor” Case — A Mirror of a Larger Problem

The recent incident where AI-generated donor photos were used in a promotional campaign illustrates a broader ethical failure.

At first glance, it may appear to be an isolated misuse of technology. But it exposes a deeper issue: intentional emotional manipulation.

If an organization presents AI-created people as genuine supporters, it transforms empathy into deception.

This is not about AI’s creative potential — it’s about human integrity. The true ethical breach lies not in generation, but in concealment.

AI image ethics 2025, therefore, must extend beyond “who made it” to “how it’s used.”

Just as we regulate food labeling or medical advertising, synthetic media labeling should become a baseline standard for digital honesty.

🧠 Philosophical Dimension — When Simulations Replace Reality

In Simulacra and Simulation (1981), French philosopher Jean Baudrillard described how replicas eventually replace the original until reality itself becomes a copy of a copy.

In 2025, that theory is no longer abstract. It’s embodied in the faces we see online — flawless, diverse, emotionally resonant, but entirely artificial.

When AI fake humans populate our screens, we risk losing our instinct to differentiate truth from simulation.

And when everything looks real, authenticity becomes meaningless.

This is the existential core of AI image ethics 2025 — not whether the images deceive others, but whether humanity begins deceiving itself.

🏛️ Legal Landscape — Regulation Struggles to Catch Up

Despite the growing urgency, the world’s legal systems are still unprepared for synthetic identity regulation.

In the European Union

The EU AI Act, adopted in 2024, includes transparency obligations that require AI-generated or manipulated content, such as deepfakes, to be disclosed as such. However, these obligations phase in over time, and how they will be implemented and enforced is still heavily debated.

In the United States

Laws vary by state. Some, like California’s deepfake laws, penalize AI-generated impersonation, especially in politics. Yet nationwide policy remains fragmented.

In South Korea and Japan

Discussions around “AI ethics certification” and “AI labeling guidelines” have begun, focusing on corporate accountability and consumer protection.

Still, AI image ethics 2025 remains largely self-regulated — a dangerous reliance on goodwill in a profit-driven ecosystem.

🏢 Corporate Responsibility — Ethics Beyond Compliance

Big tech companies increasingly recognize that regulation alone cannot preserve trust.

Google, OpenAI, and Adobe have all pledged transparency initiatives, such as Content Credentials (signed metadata that records how an image was made) and invisible watermarks that tag images as AI-generated.
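To make the idea of a machine-readable label concrete, here is a rough sketch that checks whether a file's embedded metadata declares the IPTC Digital Source Type term for AI-generated media, `trainedAlgorithmicMedia`. The function names are hypothetical, and the byte search is a deliberately crude assumption: it does not parse the metadata properly and does not verify any cryptographic signature, which is what real Content Credentials tooling is for.

```python
from pathlib import Path

# Tail of the IPTC "Digital Source Type" URI for media created by a trained model.
AI_SOURCE_TYPE = b"digitalsourcetype/trainedAlgorithmicMedia"

def declares_ai_generated(data: bytes) -> bool:
    """Crude heuristic: True if the raw bytes contain the IPTC AI-generated term.

    A False result proves nothing, because metadata is easily stripped
    when an image is re-saved, screenshotted, or re-uploaded.
    """
    return AI_SOURCE_TYPE in data

def file_declares_ai_generated(path: str) -> bool:
    """Convenience wrapper (hypothetical helper) that reads a file from disk."""
    return declares_ai_generated(Path(path).read_bytes())
```

The asymmetry matters for the ethics discussion: a present label is a useful signal, but a missing label says nothing about whether an image is authentic.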

Meanwhile, Getty Images stopped accepting AI-generated artwork from contributors in 2022, prioritizing authenticity over quantity.

These actions signal a shift: corporate ethics are becoming a competitive advantage.

Brands that openly disclose AI use are perceived as more trustworthy, while those that hide it face public backlash.

This dynamic is turning AI image ethics 2025 into not just a moral issue, but a business imperative.

📷 The Deepfake Dilemma — From Humor to Harm

Initially, deepfakes were dismissed as internet novelty. Today, they represent one of the greatest threats to public trust.

AI tools can now mimic any celebrity, politician, or private citizen with shocking accuracy. From fraudulent endorsements to revenge porn, the misuse potential is enormous.

Some forecasts suggest that by 2027, as much as 90% of online visual content may involve some degree of AI alteration.

If unchecked, the cost isn’t just misinformation — it’s the erosion of truth itself.

That’s why AI image ethics 2025 emphasizes proactive education:

Every viewer must learn to question, verify, and think critically about the images they consume.

🧩 The Emotional Economy of Synthetic Media

AI doesn’t just sell products — it sells feelings.

Marketers now use AI faces and voices to create campaigns optimized for empathy.

A smile that never existed can raise donations, win elections, or shift public opinion.

This emotional engineering raises the question:

If artificial emotion triggers real human response, is it manipulation or creativity?

The ethical boundary depends on disclosure.

When audiences know an image is AI-generated, they can interpret it critically.

When it’s hidden, manipulation becomes exploitation.

Thus, AI image ethics 2025 must address not only truth but also emotional integrity — ensuring empathy isn’t artificially weaponized.

🔬 AI Trust and Digital Literacy

To rebuild trust in the age of synthetic reality, societies must develop AI literacy — the ability to recognize, question, and ethically engage with AI-generated content.

Educational institutions are beginning to integrate “AI media awareness” into curricula. Platforms like YouTube and TikTok are experimenting with AI-generated content tags.

But genuine AI trust cannot come from technology alone. It requires cultural adaptation — an acceptance that we now coexist with synthetic beings and must define boundaries consciously.

🧭 The Road Ahead — Human Truth in Synthetic Worlds

Looking ahead, the question isn’t whether AI-generated humans will dominate digital spaces — they already do. The real question is whether human ethics can evolve as fast as our algorithms.

To ensure a sustainable digital future, three actions define the roadmap for AI image ethics 2025 and beyond:

- Mandatory Labeling: Every platform hosting AI-generated images should display clear, consistent “AI-generated” notices.

- Ethical Data Practices: Models should be trained on verified, consent-based datasets only.

- Cross-Border Regulation: International alignment on AI ethics standards is crucial to prevent jurisdictional loopholes.
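As one illustration of what "clear, consistent" labeling could look like in practice, the sketch below maps an image's origin to a single shared notice. Everything here is a hypothetical assumption made for illustration: the `Origin` categories, the `ImageRecord` fields, and the notice wording are not taken from any platform's actual policy or from any regulation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Origin(Enum):
    CAPTURED = "captured"          # photo or video of a real scene
    AI_EDITED = "ai_edited"        # real capture altered with AI tools
    AI_GENERATED = "ai_generated"  # fully synthetic

# One shared table keeps the wording identical wherever the image appears.
NOTICES = {
    Origin.CAPTURED: None,
    Origin.AI_EDITED: "Edited with AI",
    Origin.AI_GENERATED: "AI-generated",
}

@dataclass(frozen=True)
class ImageRecord:
    image_id: str
    origin: Optional[Origin]  # None means the provenance is unknown

def disclosure_notice(record: ImageRecord) -> Optional[str]:
    """Return the notice to display next to the image, or None if none is needed."""
    if record.origin is None:
        return "Origin not verified"
    return NOTICES[record.origin]
```

Treating unknown provenance as its own case, rather than silently showing no label, reflects the article's point that concealment is where the ethical breach lies.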

By prioritizing transparency and truth, we can transform AI-generated images from instruments of deception into tools of creativity and education.

💬 Conclusion — The Future of Seeing

“When machines paint human faces, the question isn’t whether they look real — it’s whether we can still tell the difference.”

In 2025, the AI image ethics debate defines the moral frontier of technology.

Every pixel, every smile, every perfectly generated human challenges the meaning of authenticity itself.

But the solution isn’t to fear the fake — it’s to redefine truth for an age where humans and algorithms share the canvas.

Transparency is the new honesty.

Accountability is the new creativity.

And the future of trust will depend on our willingness to see beyond what looks real — to what is real.